ChatGPT and Bard are artificial intelligence language models from OpenAI and Google, respectively. ChatGPT is already disrupting the internet. Bard, meanwhile, is considered scary good by some, while others think it is no match for the brilliance of ChatGPT’s design. Yet Google lost about $100 billion in market cap because of a snafu during its Bard demo.

Artificial intelligence will play an increasingly important role in our lives. In fact, we have been looking at AI’s potential on this very site for over a decade. Slick interfaces like ChatGPT, however, have suddenly made AI accessible to consumers. While many find this frightening, I do not think it is a bad thing.

So how does Bard stack up against ChatGPT? Are the days of humans creating content over?

To answer these questions, let’s analyze the basic principles behind these two platforms and try to estimate their upcoming impact on our lives.

The AI basics

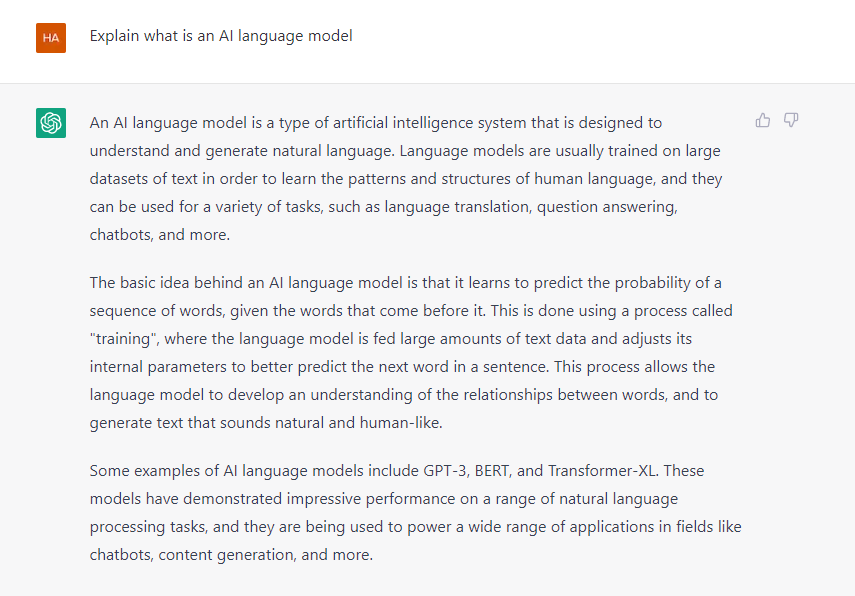

I’ve mentioned that both ChatGPT and Bard are AI language models. But what exactly is a language model? I asked ChatGPT that very question, and here is how it explained it.

“An AI language model is a type of artificial intelligence system that is designed to understand and generate natural language.”

“The basic idea behind an AI language model is that it learns to predict the probability of a sequence of words, given the words that come before it. This is done using a process called ‘training’. This process allows the language model to develop an understanding of the relationships between words, and to generate text that sounds natural and human-like.”

This is a pretty decent definition, in my opinion. But to make it a little more accessible, let’s look at an example.

When you type a word on your phone’s keyboard, the keyboard suggests what you might write next and shows that word above the keys. Internally, the keyboard app runs some calculations to predict what you are likely to type next, that is, the most probable next word in the sentence.
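To make the keyboard example concrete, here is a minimal Python sketch of the simplest kind of next-word predictor, a bigram model. It counts which word follows which in some training text, then suggests the most frequent followers of the last word you typed; the ratio of those counts is its estimate of the probability of the next word. The function names and the sample typing history are hypothetical, and this illustrates the idea only; it is not how any particular keyboard app, let alone ChatGPT, is implemented.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def suggest(following: dict[str, Counter], last_word: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent words seen after last_word."""
    counts = following.get(last_word.lower())
    if not counts:
        return []  # never seen this word, so no suggestion
    # Ties keep the order in which the words were first seen.
    return [word for word, _ in counts.most_common(k)]


def next_word_probability(following: dict[str, Counter], last_word: str, candidate: str) -> float:
    """Estimate P(candidate | last_word) as a ratio of counts."""
    counts = following.get(last_word.lower(), Counter())
    total = sum(counts.values())
    return counts[candidate] / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical typing history standing in for training data.
    history = "see you soon see you later see you tomorrow thank you so much see you soon"
    model = train_bigrams(history)
    print(suggest(model, "see"))  # ['you']
    print(suggest(model, "you"))  # ['soon', 'later', 'tomorrow']
    print(next_word_probability(model, "you", "soon"))  # 0.4
```

A real keyboard looks at more than one previous word and blends in your personal history, while models like ChatGPT replace the counting table with a neural network that conditions on much more of the preceding text.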

This is essentially how AI language models work. The difference is scale. Your keyboard usually relies on a fairly simple model of the language plus your own typing history, and predicts the next word mainly from the last word you typed. ChatGPT, on the other hand, has been “trained” on a huge portion of the text on the internet, and the largest model in the GPT-3 family behind it has about 175 billion parameters (the internal values the model adjusts during training). In fact, Elon Musk said that until recently OpenAI also had access to Twitter’s database, going beyond what even someone like Google gets by simply crawling Twitter.

All of this raw data makes ChatGPT better at predicting and generating human-like text from short inputs.

So when you enter some text or ask it a question, the algorithm first breaks it down into individual words or clusters of words, called tokens.

It then analyzes the sequence of the words, the order in which they appear in your text, and the relationships between them to understand the context and the desired output. Finally, it generates a response based specifically on that input.
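Here is a minimal Python sketch of that first step: splitting text into words and punctuation, then splitting each word into the longest pieces found in a vocabulary. The tiny vocabulary and the function names are hypothetical, and the greedy longest-match rule is a simplification used here for readability; GPT models actually use byte-pair encoding, with a learned vocabulary of tens of thousands of tokens.

```python
import re

# Hypothetical toy vocabulary; real models learn tens of thousands of tokens.
VOCAB = {"chat", "bot", "s", "un", "believ", "able", "are", "the", "is",
         "answer", "ing", "question"}


def split_words(text: str) -> list[str]:
    """Split text into lowercase words and single punctuation marks."""
    return re.findall(r"[a-z]+|[^\sa-z]", text.lower())


def tokenize_word(word: str, vocab: set[str]) -> list[str]:
    """Greedily take the longest vocabulary entry at each position."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest possible piece first, then shorter ones.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No vocabulary entry matches: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens


def tokenize(text: str, vocab: set[str] = VOCAB) -> list[str]:
    """Break text into words, then each word into vocabulary pieces."""
    return [tok for word in split_words(text) for tok in tokenize_word(word, vocab)]


if __name__ == "__main__":
    print(tokenize("Chatbots are unbelievable!"))
    # ['chat', 'bot', 's', 'are', 'un', 'believ', 'able', '!']
```

In a real model, each of these tokens is then mapped to a number before the model analyzes how the tokens relate to one another.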

A good language model can read a piece of text and summarize it, manipulate it the way you want, and answer questions about it. Basically, it should be able to replicate all the language processing we do naturally.

Let’s move to the meat of the matter and see what the fuss is all about.

ChatGPT

Released to the general public on November 30, 2022, ChatGPT is built on a model from OpenAI’s GPT-3.5 series, a refinement of GPT-3, the language model OpenAI released in 2020. OpenAI is also the company behind DALL-E.

As soon as it hit the market, people went crazy over how good it was at answering questions and solving complicated problems.

It answered general queries and philosophical questions, solved coding problems, generated text and data sets, wrote code blocks and creative articles, stories, and poems, imitated other people, and handled a variety of other queries with nuanced answers.

The tech community went nuts over it, and it was continuously trending on Twitter for days.

It took only 5 days to reach 1 million users, and it tops the list of apps ranked by how quickly they reached their first 100 million users: ChatGPT got there in about 2 months. By comparison, the closest competitor, TikTok, took 9 months to reach 100 million users.

It is radically different from, and more useful than, anything we’ve seen so far. Experts argue that it has the potential to change how the internet works and that it is already on its way to wiping out many jobs.

Microsoft was quick to invest in OpenAI, the company behind GPT-3 and ChatGPT, and plans to incorporate the technology into its own search engine, Bing. A test version is currently available to some users.

A next-generation question-answering product already threatens Google’s large share of the search business, but Microsoft’s entry into the story made Google realize that its rival was getting more powerful and that something had to be done about it.

Enter Bard

On 6 February 2023, Google’s CEO Sundar Pichai published a blog post titled “An important next step on our AI journey” to introduce Bard, Google’s own take on the AI language model.

Google is no newcomer to language models. Two years ago, the company announced LaMDA, short for Language Model for Dialogue Applications. Like GPT-3 and Google’s own BERT, it is built on the Transformer neural network architecture.

Google says Bard uses a lightweight version of LaMDA and runs on TPUs, which are faster and more efficient for AI applications.

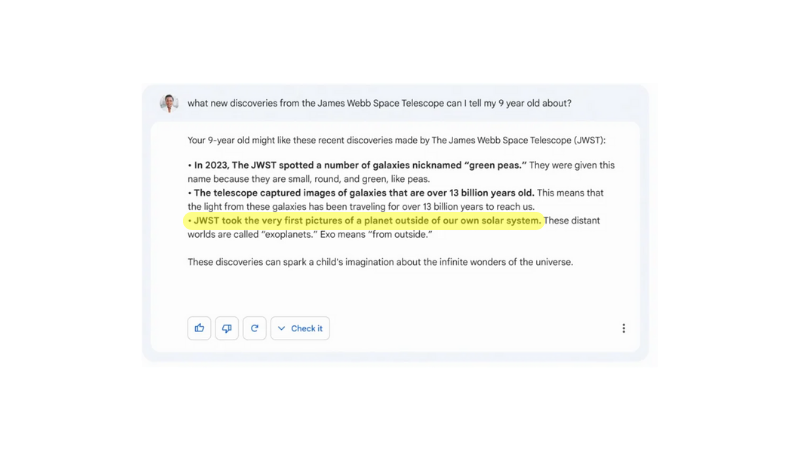

To show off the new system’s capabilities, Google released an advertisement in which Bard answers a question. But people were quick to spot a mistake in the answer: Bard claimed that the James Webb Space Telescope took the very first pictures of a planet outside our solar system, which it did not. As a result, shares of Alphabet (Google’s parent company) fell about 7%, wiping out roughly $100 billion in market value.

A few months ago, a Google engineer claimed that LaMDA was so good that he believed it was sentient. ChatGPT, for its part, also makes silly and obvious mistakes from time to time.

Here are some examples: basic math, factual errors, and wrong abbreviations.

Because we don’t have access to Bard yet, it’s hard to judge which of the two is better.

We also need to consider that Google has vastly more data and processing power than OpenAI. It will be interesting to test Bard once it is released to the general public. Also, not every search query has to go through a chat interface. Some areas, like news and hard facts, are still difficult to replace with chatbots.

Several questions also arise: what happens to Google’s revenue model, how the creators whose work serves as input to these AI models will be compensated, how copyright law will adapt, and what happens when AI-generated content starts feeding back into the models themselves. Only time will answer these questions, and I’m eagerly waiting.

As mentioned earlier, Bard runs on TPUs (tensor processing units), hardware accelerators that Google designed specifically for AI tasks. For these workloads, they can be faster and more efficient than the GPUs that ChatGPT runs on. In principle, this means that under the same conditions Bard could respond faster and cost less to operate and maintain than ChatGPT.

But the conditions are not the same, and we have yet to test Bard. Until then, ChatGPT seems to be winning this race. Still, there is plenty of room for Google to improve, and it has the competitive advantage of a larger audience than Microsoft’s Bing and ChatGPT. Let’s see how this technology war unfolds and who wins it.